Former Google Product Director Turned Web3 Founder Andrey Doronichev Warns of Fakes Going from Deep to Cheap

Optic has helped identify over 100 million fake NFT images

As Director of Product at Google and Head of Mobile at YouTube, Andrey Doronichev helped create the new reality of social media.

Driven by a personal mission to liberate information, he believed that if everyone had access to the means of distributing information, the world would have no dark corners. The Arab Spring proved him right, but at a cost: the same technologies used to liberate people were turned into weapons.

For Doronichev, a Russian-American who grew up in the Soviet Union, those fears crystallized in 2022, when Russia invaded Ukraine.

“I’ve seen how Russian society was moved by propaganda and completely false narratives that cause people to attack innocent individuals in their homes,” he said. “It’s a horrific reality of today that has had a deep personal impact on me.”

Doronichev got into big tech to liberate information; he left it to authenticate information. He saw signs that society was starting to splinter, partly through his own doing. The fundamental existential threat to humanity, Doronichev believes, is technology falling into the wrong hands and being used to manipulate people and turn neighbors into nemeses.

“If humans don’t have a way to tell the truth from lies, we stop living in the existence of truth and that’s the scariest part,” he said. “What might happen to the Internet is that, soon enough, we won’t believe anything we see online. It’s really important we defend the idea of truth.”

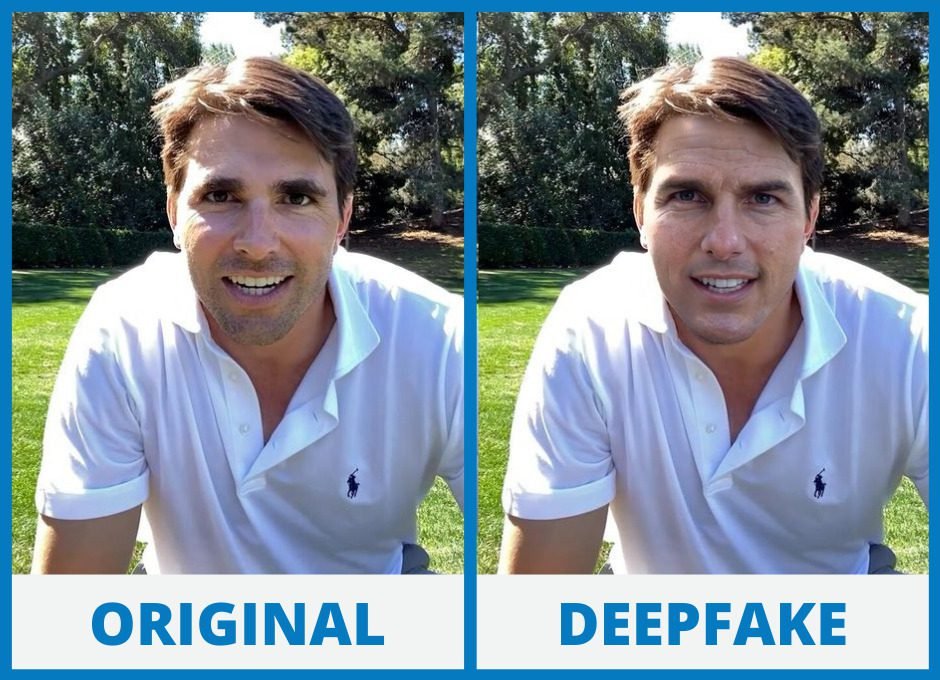

What Doronichev saw play out with social media is now happening with artificial intelligence (AI). One question that keeps him up at night: “How can I tell if something is created by a human or a machine?”

Fighting fake as the default

In March 2022, Doronichev launched Optic, a startup whose technology checks the authenticity of images, video and voice, instantly distinguishing real from fake.

Optic’s timing struck the right note: it launched mid-Ukraine war, after the NFT boom, and just before the public was hypnotized by DALL-E 2 and ChatGPT.

“Blockchain guarantees uniqueness and immutability of the ledger record, but it has nothing to do with the contents of the document itself. An extra layer of infrastructure is required to determine whether the image or video is real, AI-generated, stolen or contains copyrighted materials,” Doronichev said.

Optic found its early footing in the niche NFT space, where authenticating an image had a clear monetary value. “Our technology detects millions of fake digital items per second for marketplaces such as OpenSea,” he said. “In total, we’ve discovered over 100 million counterfeit NFTs.”

The use case for Optic, however, is much broader. Doronichev envisions dating apps and social media platforms using Optic to authenticate profile photos and protect users.

“Our thesis is that things are about to get an order of magnitude hotter for humanity. Until now, there was a proliferation of distribution platforms and recommendation systems by AI. But today, we’re entering the era where content itself is going to be generated by AI. Anyone, anywhere, can now dream up anything in photorealistic quality and instantly distribute it to the whole of humanity,” he said.

What’s changed in the past year, Doronichev said, is the cost and accessibility of this AI technology.

“State-sponsored election meddling has happened before, but it took thousands of people employed by troll farms,” he said. “Think of what they can do now with AutoGPT. You can have hundreds of thousands of AI agents generating hyper-targeted banner ads and running campaigns to unprotected groups in society. The playbook developed by Cambridge Analytica is now democratized in the worst type of way.”

Meanwhile, nearly all the focus is on making it easier to create more content, with little engineering effort going into trust, safety, authenticity and moderation.

The historical record of an image

“Optic analyzes media assets to discover where they came from. Did it originate from a human or a machine? Was it something that was IP-protected and copyrighted years ago? Does it contain malice, violence, porn or harmful content? Optic answers all these questions about every media item on the Internet,” he said.

“Imagine you had a magical tool, a magnifying glass you could hold over every JPEG to get all these answers. For example, it first appeared as a photograph in the New York Times, then got machine-altered in Photoshop, and later was fed into DALL-E, which changed the background in this region.”

Discerning what is and isn’t real is imperative to a functioning society: for individuals, companies, countries and humanity at large. When our sense of reality is skewed, so is our connection with others. This leads to damage we can’t even conceive of.

The tech entrepreneur believes blockchain technology has an important role to play, storing information immutably so that authenticity can be certified.

“Imagine a future digital photograph blockchain that contains every real image taken by a camera in one trustless ledger. So, anytime you take a photo with an iPhone, it records a cryptographic signature of every image. Imagine if we could do that for every camera in the world in an immutable way,” he said. “This is what we see in the long-term, where immutability and cryptographic proof of work is used to identify a reasonable authenticity.”
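To make the ledger idea a little more concrete, here is a minimal Python sketch of an append-only, hash-chained record of image fingerprints. It is an illustration under stated assumptions, not Optic’s design: the `ImageLedger` class is hypothetical, a SHA-256 digest stands in for the per-image cryptographic signature Doronichev describes (a real camera would sign with a private key), and a simple hash chain stands in for a blockchain.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class ImageLedger:
    """Hypothetical append-only ledger of image fingerprints (illustration only)."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _fingerprint(image_bytes: bytes) -> str:
        # SHA-256 of the raw bytes; an assumed stand-in for a camera's signature.
        return hashlib.sha256(image_bytes).hexdigest()

    @staticmethod
    def _entry_hash(image_digest: str, prev_hash: str) -> str:
        # Each entry commits to the one before it, so rewriting history breaks the chain.
        payload = json.dumps({"image": image_digest, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def record(self, image_bytes: bytes) -> str:
        """Append a fingerprint at capture time and return it."""
        digest = self._fingerprint(image_bytes)
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"image": digest, "prev": prev, "hash": self._entry_hash(digest, prev)}
        )
        return digest

    def chain_is_intact(self) -> bool:
        """Recompute every link; any edited entry makes this return False."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != self._entry_hash(entry["image"], entry["prev"]):
                return False
            prev = entry["hash"]
        return True

    def is_registered(self, image_bytes: bytes) -> bool:
        """True only if the ledger is untampered and holds this exact image."""
        digest = self._fingerprint(image_bytes)
        return self.chain_is_intact() and any(e["image"] == digest for e in self.entries)


if __name__ == "__main__":
    ledger = ImageLedger()
    photo = b"raw sensor bytes from a camera"  # stand-in for real image data
    ledger.record(photo)
    print(ledger.is_registered(photo))              # True
    print(ledger.is_registered(photo + b"edited"))  # False: any change alters the digest
```

Because the fingerprint is an exact hash, even a harmless re-compression makes a photo look unregistered. The sketch therefore only answers “was this exact file recorded?”, not the broader provenance questions Optic describes.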